The Gullibility of Humans

In hindsight, it’s no surprise that the government created a Disinformation Governance Board. It is a natural escalation in the battle over social media mis/dis/information, a topic that has not strayed far from the public eye for several years now. Just in the last month, two massive stories erupted onto the scene: Elon Musk’s pending Twitter buyout and the confirmation of Hunter Biden’s laptop story. Before that, it was several years’ worth of Covid- and election-related misinformation stories.

Mis/dis/information on social media is a real problem. It's problematic that honest ideas are censored, and it's problematic that dishonest ideas spread.

My contribution won’t be to provide advice on what might be done about this problem (although a government-appointed governance board seems like it’s decidedly not the answer). I’ve already weighed in on why I think Free Speech, as a guiding principle, is vital, which is as far as I'm willing to step into the solution space. This essay will focus specifically on why people are susceptible to believing things that aren’t true, something I think is fundamental to the problem. After all, if people were able to easily separate fact from fiction, misinformation would be an unfounded concern.

Before we begin, I want to define truth as I use it in this essay, which is also how I think it is understood in the broader national conversation around mis/dis/information.

Truth is a claim about objects and events in the real world. This includes scientific truths and observations about who did what, or who said what, and when. The truth describes what is or was. These are also called empirical truths, because they rely on direct observations of the world. I want to make especially clear that truths are not statements of morality, and are not judgements of what is right, just, or fair. Different opinions of morality are expected in a functioning society, but a well-functioning society should ideally be in agreement about the nature of reality. The reason I think social media misinformation is such an energetic topic is that it involves differing understandings of the nature of objective reality.

With that out of the way, back to the original question.

Why are humans so easily tricked into believing things that aren’t true? Why are we so gullible?

The short, and unsatisfactory, answer is that humans are bad at independently determining the truth. But let’s dig deeper...

Geography class

Imagine for a moment that you are once again attending your first day of 8th grade. You shuffle down the hallway seeking the room number on your schedule that corresponds to your first class of the day, Geography. You arrive on time and find a seat in the back of the class. The room is warmer than you’d prefer; it’s still late summer, and the air conditioning is struggling to keep pace.

The class begins five minutes later, promptly at 8:00am. The hubbub of students exchanging summer stories dies down, and the teacher passes around a stack of world maps. Perhaps a little cliché for a first Geography class, but stay with me.

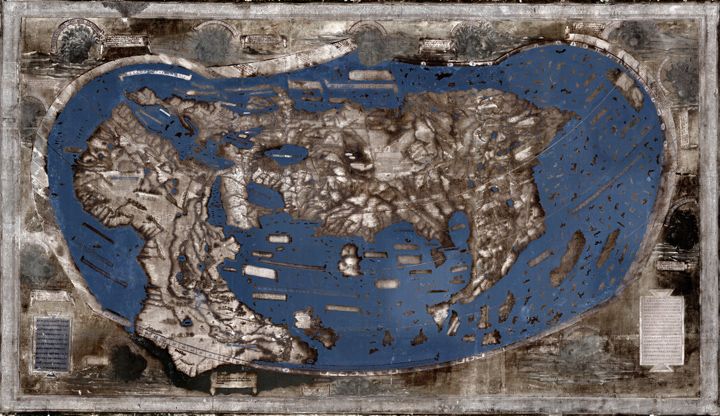

These aren’t just any maps. They are maps made by the best cartographer in Europe, arguably the best in the world.

The pile of maps finally reaches your seat and you take one. But when you look down, the map looks a bit strange. Not just strange, it looks wrong, like it was drawn by a 4-year-old. Oddly, no one else looks the slightest bit concerned about this obviously wrong map of the world. They just accept it, despite its obvious flaws.

What in the world is going on? (pun intended)

No, you haven’t walked into a classroom of some strange flat Earth school; you’ve just walked into a classroom in 15th-century Europe. The map in your hands really is the best map available at the time, a map some think was studied by none other than Christopher Columbus in preparation for his journey to the Indies. (Perhaps this map explains how he ended up in the Caribbean instead.)

Ok, now remove yourself from 8th grade.

First things first, we’ve obviously gotten better at making maps in the 500 years since Columbus. But that’s not the point of the story. No, the point is that every other person in your 15th century Geography class, every other person in the world, thought that the map on your desk accurately depicted the globe. They had a completely different belief about the nature of reality than you did. And they weren’t malicious or crazy or dumb (not all of them at least) — they just believed the wrong information.

How is this possible?

Humans rely on the knowledge of trusted people and institutions for facts about the world that they cannot possibly determine independently. It is conceptually similar to Adam Smith’s specialization theory of labor, but transferred into the domain of knowledge. If everyone were required to acquire for themselves, empirically, all knowledge about the natural world, humanity would never progress.

To know what a map of the globe looks like, or to understand the structure of an atom, or the reasons the tides move, it is useful that we outsource our understanding to the expertise of trusted others. In this way we vastly increase the amount of acquirable knowledge. However, we introduce the risk that the person we trust to provide that information might be mistaken in some way that we are unable to detect.

Clearly, the 15th century map maker, no matter how trustworthy, was mistaken, and as a result everyone was misinformed.

Brave New World

Let’s explore another example, this time from Aldous Huxley’s classic dystopian novel Brave New World.

An authoritarian government called the World State rises to power after a chaotic period of war. The controllers of the World State believe that they have discovered how to perfect the human experience: by removing from society all sources of pain and conflict. “Community, Identity, Stability” is the World State motto.

The opening chapters of the book describe the workings of the “Central London Hatchery and Conditioning Center”, a building where new citizens of the World State are born (i.e. removed from a test tube) and raised (i.e. indoctrinated and conditioned according to their pre-assigned role in society).

As you’d expect in a dystopian world, even before they are born, each citizen is assigned to a role in a caste-like system, a role in which they will spend their whole lives. Stability is achieved, according to the World State, by eliminating class mobility. While the fetuses are still in test tubes, the lowest future members of society, the Epsilons, are treated in a way that impairs their physical and mental capabilities, and of course the highest ranking members, the Alphas, are not.

Once born, the psychological and mental conditioning begins, and this is what is most relevant for this essay. Children are indoctrinated into the belief system according to their future roles in society via hypnopedia (learning while sleeping). Each child hears the teachings prescribed by the World State thousands of times over the course of their childhood.

[N.B. The specific method of indoctrination used by Huxley in Brave New World, hypnopedia, is not proven to work. But exposing people repeatedly to ideas while they are conscious is a well-established method for getting people to believe those ideas, as we will see later.]

The result of this mental indoctrination is praised by a World State leader.

“...the child’s mind is these suggestions, and the sum of the suggestions is the child’s mind. And not the child’s mind only. The adult’s mind too–all his life long. The mind that judges and desires and decides–made up of these suggestions. But all these suggestions are our suggestions!” ... “Suggestions from the State.”

It goes without saying that the "suggestions" form a model of reality that bears little resemblance to the real world. Again, everyone is misinformed. A citizen of the World State knows things to be true not by any process of conscious thought, or reason, but by what is familiar as a result of repeated exposure.

Two shortcuts to truth

Each story describes a shortcut, or heuristic, humans use to form their beliefs about the world.

The first story about the 15th century Geography class describes how people form beliefs based on the ideas of a trusted source. Truth in this case is a function of trust in the source. The majority of the information we acquire comes to us from trusted sources. Daniel Kahneman describes this model of belief in his interview with Shane Parrish on The Knowledge Project podcast.

“We have beliefs because mostly we believe in some people, and we trust them. We adopt their beliefs. We don’t reach our beliefs by clear thinking, unless you’re a scientist or doing something like that.”

The second story, fictionalized in Brave New World, describes how beliefs form out of familiar ideas. In this case, truth is a function of exposure: the more times a person is exposed to an idea, the more likely it is they will accept it as fact. The formal name for this theory of truth acceptance is the familiarity effect, or the mere-exposure effect. Again, Kahneman aptly describes this method, this time in his book Thinking, Fast and Slow.

“A reliable way to make people believe in falsehoods is frequent repetition, because familiarity is not easily distinguished from truth.”

Something is missing

You might notice that something is missing from both shortcuts humans use to find the truth: verification that what we've been told is in fact true. Your observation is correct. We don't usually "waste" the time and energy to do so.

The closest we come is to check against our intuitions. Kahneman writes again in Thinking, Fast and Slow, "How do you know that a statement is true? If it is strongly linked by logic or association to other beliefs or preferences you hold..."

In other words, if something feels right, if it fits into our existing worldview, we will accept it as true. If it feels wrong, that is, if we do not want to believe it, then we will attempt to refute it.

This is why the social psychologist Jonathan Haidt writes in his book The Righteous Mind, "Reason is the servant of intuitions," and, "Reason doesn’t work like a judge or teacher, impartially weighing evidence or guiding us to wisdom. It works more like a lawyer or press secretary, justifying our acts and judgments to others."

Reason, the tool that we might use to verify whether or not to believe something, is employed only after we decide what we want to believe.

The truth at the individual level, then, is not the result of reasoned deliberation. It is a combination of ideas from trusted sources and ideas with which we are already familiar, judged by our existing intuitions. This is why bad ideas and misinformation spread so easily. If an idea fits into our existing worldview, if it is promoted by someone we trust, if we see it enough times, it becomes the truth.

Do you trust me?

This is why humans are so gullible.